What happens when a college student builds an AI tool meant to go undetected during the one thing every tech applicant dreads, the coding interview? That’s exactly what Chungin “Roy” Lee, a 21-year-old computer science major at Columbia University, set out to discover. Frustrated by what he described as a broken hiring system, Roy built Interview Coder, an AI tool designed to give tech interview candidates an unfair edge. And it worked, until it didn’t.

The AI Tool That Beat the System, Until It Got Him Expelled

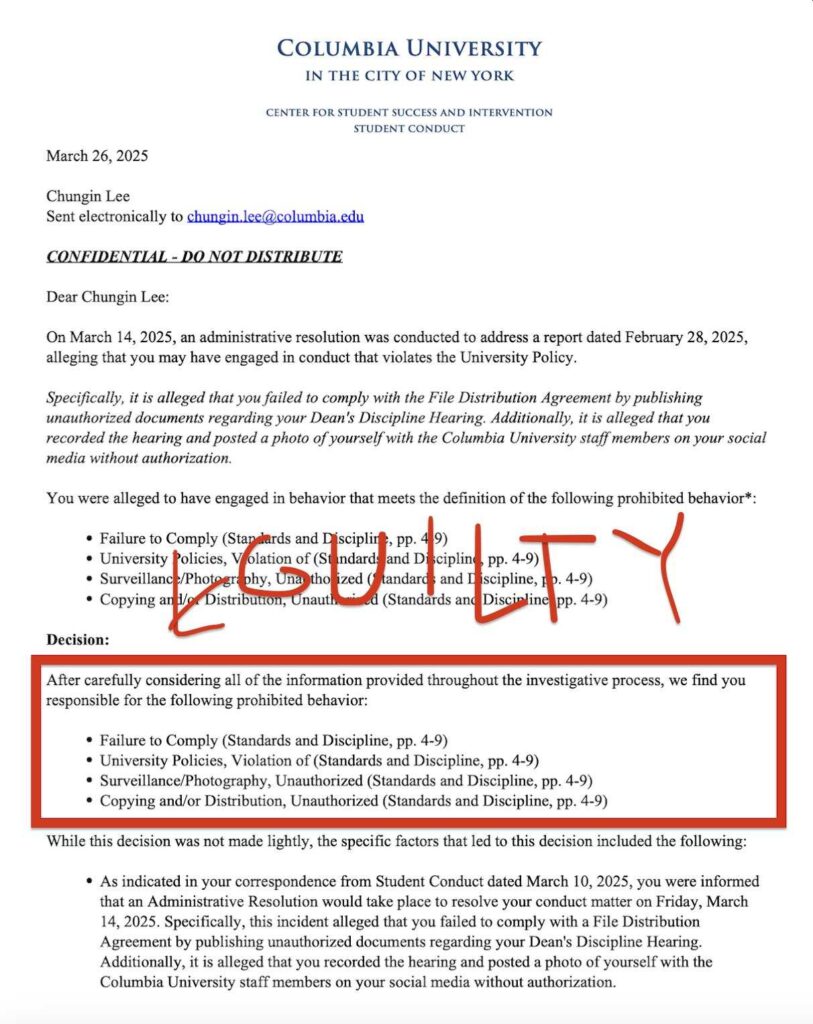

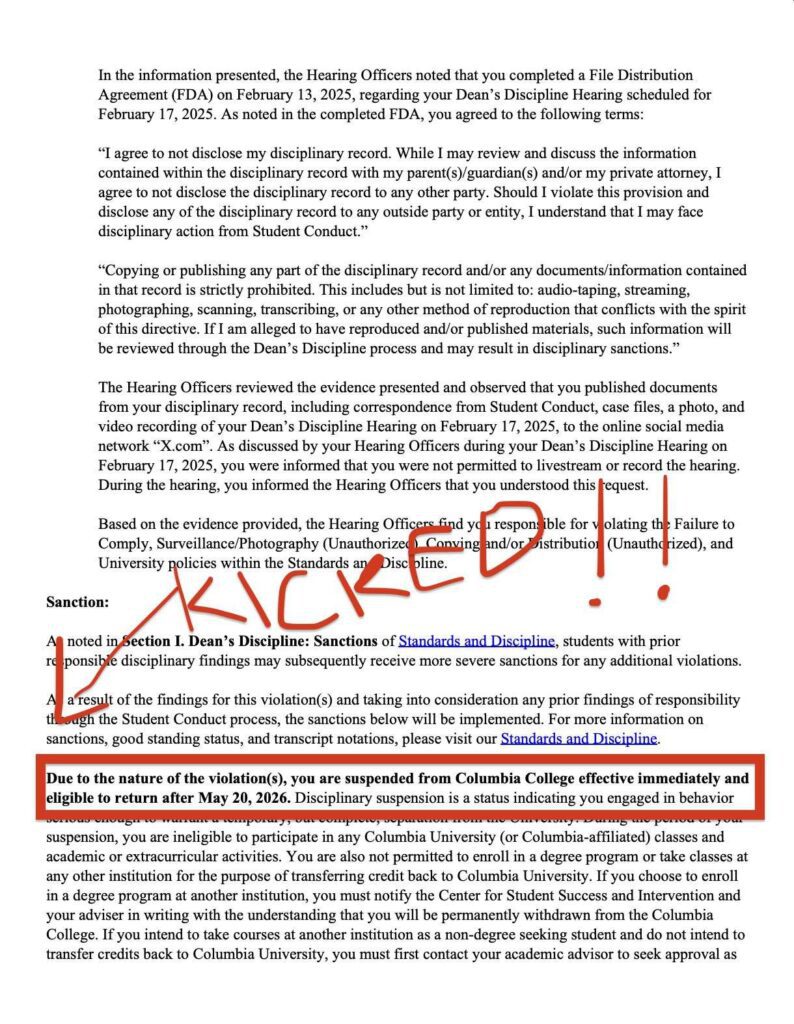

Roy wasn’t just complaining about the infamous LeetCode grind. He took action. In just one week, he built Interview Coder, an overlay that silently fed live answers to candidates during interviews on platforms like Zoom and HackerRank. Written in JavaScript, the tool bypassed detection software and mimicked real-time problem solving. Now Columbia University has made its decision: Roy has been expelled.

From Student Hacker to Startup Controversy

Not only did Roy build the AI tool, he used it himself. And it worked: he landed offers from Amazon, Meta, TikTok, and Capital One. Then, in a bold move, he uploaded a YouTube video revealing the entire process: how Interview Coder functioned, how he used it to cheat, and how shockingly easy it was to game the system.

The video went viral. And that’s when the backlash began.

Amazon tried to get the video taken down and reported him to Columbia. The university initially suspended him. After Roy publicly shared screenshots from his disciplinary hearing and even leaked photos of faculty members, that suspension turned into a full expulsion.

How Interview Coder Actually Worked

Interview Coder wasn’t some basic script; it was a sophisticated AI tool. Priced at $60/month, it was reportedly on track to generate over $2 million in revenue. The software read technical questions in real time, sent them through a large language model (LLM), and returned answers in a subtle, human-like format. It even adjusted its window position to align with the user’s eye movement, reducing suspicion during live interviews.

And Roy didn’t try to hide what he’d done. On X (formerly Twitter), he boasted, “I got offers from the big dogs. And I built this in a week.”

The Broken LeetCode Interview Culture

Roy’s actions struck a nerve with many in tech. Anyone who’s ever prepped for a software engineering job knows the grind: hundreds of LeetCode problems that barely reflect the actual work. Roy believed that system was broken.

“I spent 600 hours practicing LeetCode, and it made me miserable,” he told CNBC. “The point of the software is to hopefully bring an end to LeetCode interviews.”

To him, Interview Coder wasn’t just a cheat code. It was a protest against an outdated system.

Tech Industry Pushback Was Immediate

Amazon called Roy out for cheating and for selling the tool. Meta and TikTok revoked his offers, and Capital One withdrew its interest as well. But Roy seemed unfazed. On social media, he responded: “Amazon execs r so mad LOLLL maybe stop asking dumb interview questions and people wouldn’t build shit like this.”

Roy was accused of violating academic integrity policies, and his disciplinary hearing soon went public. Once he posted internal documents and pictures of staff members online, the university expelled him permanently.

In Roy’s words: “I just got kicked out of Columbia for taking a stand against Leetcode interviews.”

Online Reactions Split Between Hero and Hacker

Online, reactions were mixed. Some hailed Roy as a modern-day Robin Hood exposing the tech industry’s outdated and unrealistic hiring processes. Others called him exactly what Columbia did: a cheater.

“You are legendary,” one person posted. “Not because I want to cheat, but because I’ve wanted to destroy LeetCode interviews for years.”

But some warned of the unintended consequences: “Cheating on digital interviews will just force companies back to in-person stuff—worse for workers.”

Silicon Valley’s Alarming Response to AI Cheating

Roy’s experiment sent a ripple through Silicon Valley. Google CEO Sundar Pichai reportedly floated the idea of bringing back in-person interviews to avoid AI cheating. Amazon now requires candidates to confirm they aren’t using external tools. Meanwhile, Deloitte and Anthropic have already dropped remote tech interviews altogether.

This raises a larger question: if an AI tool can ace your interview process, are you really assessing human ability, or just measuring pattern memorization?

What’s Next for Roy?

Despite losing his Ivy League spot and burning bridges with Big Tech, Roy isn’t backing down. He’s headed to San Francisco to grow his startup.

“I don’t feel guilty,” he told CNBC. “If there are better tools, it’s their fault for not adapting.”

Columbia has not issued a public comment, but a photo of Roy’s official expulsion letter is already making the rounds online.

Some critics call him a scammer. Others see a visionary founder unafraid to call out the flaws in a deeply entrenched system. Either way, Roy’s message is clear, and the industry is paying attention.

As he boldly posted: “LOL. no one is safe.”

And maybe he’s right.